Designing AI: Seeing the big picture

A mental model for framing AI as simply automation

“Automation, automation, automation, automation, automation, automation, automation, prepare for mutation.”

— Stu Mackenzie (KGLW), Automation

Welcome to the first in a series of posts where I attempt to demystify the practice of designing AI, designing for AI, or simply understanding AI. My hope is to equip you with the core mental models, language, and framing you’ll need to have productive conversations with your product and engineering counterparts.

If you find this useful, please let me know in the comments below, or just hit reply to this email. Also, consider sharing it with another designer interested in designing for AI. And if you want to read these as they are published, consider subscribing for free.

What is Artificial Intelligence, and why should you care?

Googling “What is Artificial Intelligence?” today returns tons of answers. For example, my first hit is from IBM’s website:

Artificial intelligence leverages computers and machines to mimic the problem-solving and decision-making capabilities of the human mind

That’s actually not a bad definition. But why should we, as designers, care? Let’s ask our new friend ChatGPT, shall we?

Me:

Why is Artificial Intelligence important to designers?

ChatGPT:

Artificial Intelligence (AI) is important to designers for several reasons, as it can enhance and transform various aspects of the design process. Here are some key reasons why AI is significant for designers:

Automation of Repetitive Tasks: AI can automate routine and repetitive tasks, such as data analysis, image processing, and layout design. This allows designers to focus more on creative and strategic aspects of their work, leading to increased productivity and efficiency.

Personalization and User Experience: AI can analyze user data to understand preferences, behaviors, and patterns. Designers can leverage this information to create personalized user experiences, tailoring interfaces and content to individual users, thereby improving engagement and satisfaction.

Generative Design: AI can be used for generative design, where algorithms explore numerous design possibilities based on specified parameters and constraints. This can lead to innovative and optimized design solutions that may not have been considered through traditional design processes.

Predictive Analytics: Designers can use AI to analyze trends and predict future design preferences. This can be particularly valuable in fields such as fashion, graphic design, and product design, where staying ahead of trends is crucial.

Efficient Design Collaboration: AI-powered tools facilitate collaboration among designers by streamlining communication, version control, and feedback processes. This helps teams work more efficiently and ensures that everyone is on the same page during the design process.

Enhanced Creativity: AI tools can act as creative assistants by suggesting design elements, color schemes, or layout options. While human intuition and creativity are irreplaceable, AI can provide inspiration and help designers explore new possibilities.

Accessibility and Inclusivity: AI can contribute to making designs more accessible and inclusive. For instance, AI algorithms can help identify and address potential accessibility issues, ensuring that designs are usable by a wider audience, including individuals with disabilities.

Augmented Reality (AR) and Virtual Reality (VR): AI plays a significant role in AR and VR applications, allowing designers to create immersive and interactive experiences. This is particularly relevant in industries like gaming, architecture, and simulation, where realistic and dynamic environments are crucial.

Data-Driven Decision Making: AI can analyze large datasets to extract valuable insights, helping designers make informed decisions. Whether it's understanding user behavior or evaluating the performance of design elements, data-driven insights can lead to more effective and successful designs.

Adaptive Design: AI can enable adaptive design solutions that respond dynamically to user interactions and environmental conditions. This flexibility ensures that designs remain effective across various devices, platforms, and contexts.

In summary, AI empowers designers by automating tasks, providing valuable insights, fostering creativity, and enhancing the overall design process. As technology continues to advance, the collaboration between AI and designers is likely to bring about even more innovative and efficient design solutions.

OK, that was a pretty robust answer. But the most important word in that massive response is “automation.” Automation is a good tool in the designer’s toolkit: once we understand the progress our users are trying to make in their lives, automation is a powerful way to make our solutions easier to use, cheaper to access, or more enjoyable overall.

Hot take: “Artificial Intelligence” has become a fancy way of saying “automation.”

Yes, I know AI is an entire field of study. Yes, it’s more complicated than just automation. But almost every “AI” feature I’ve ever worked on can be reduced to the automation of a task: either a task previously done by a human, or a task neither a human nor a machine could do before.

So as a designer tasked with finding ways to “leverage AI” in a product, a very easy way to start is by thinking about all of the tasks users do today that would be made easier — or even obsolete — with more automation.

BUT… by automation, I do not always mean the full removal of the human. Automation exists on a spectrum. Here’s how I frame automation in my own design practice:

Mental Model: The Automation Spectrum

Humans have been automating things for centuries. The most obvious period was the Industrial Revolution, when we built giant machines like the power loom. In my lifetime, we’ve used microprocessors and software to harness the computational power needed for more sophisticated automation.

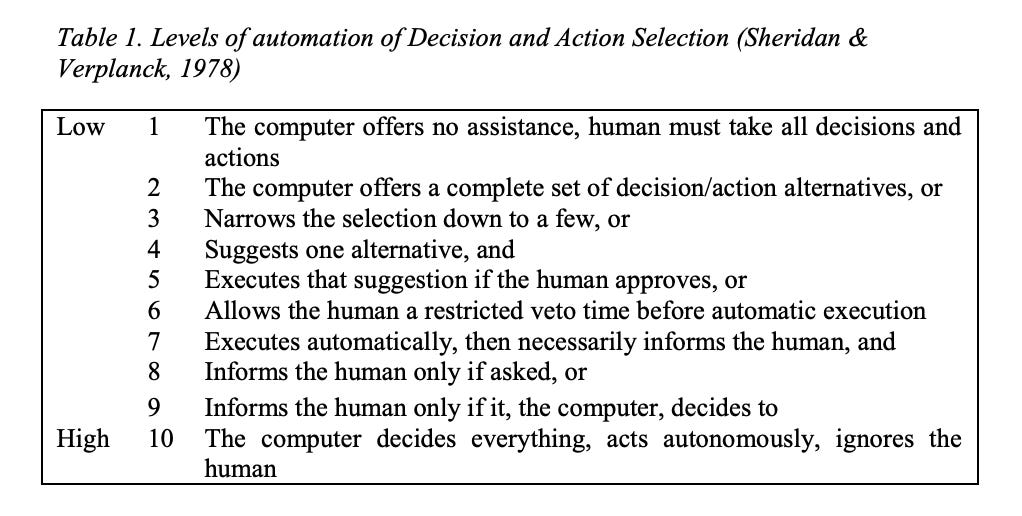

As you can see, automation happens on a spectrum. Early research on human-computer interaction (Sheridan & Verplank, 1978) defined 10 levels of automation.

Later research (Endsley & Kaber, 1999) added the roles a computer or human may perform: Monitoring, Generating, Selecting, and Implementing.

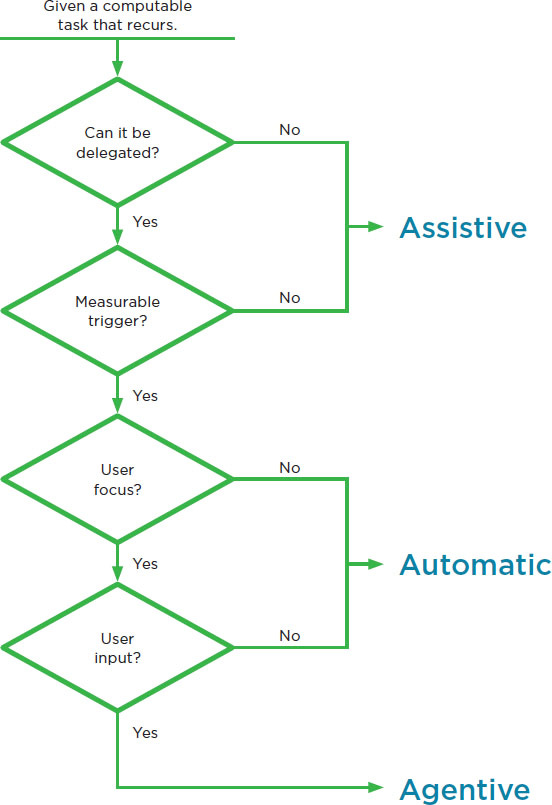

Both spectrums help paint an overall picture of who (or what) is doing the work. But the visualization of the Automation Spectrum I come back to again and again in my design practice is Chris Noessel’s, which I first discovered in one of his talks. He describes four main types of automation: Manual, Assistive, Agentive, and Automated. In his talks, he has used this simple illustration (I’ve recreated it below, but you can watch Chris present the framework in 2019 at the From Business to Buttons design conference):

The spectrum runs from fully manual (a human does all the work) to fully automated (a machine does all the work). The tricky part is the middle two types. What is the difference between an Assistant and an Agent? The more you think about it, the more complex it gets, but luckily Chris has spent many hours teasing out the nuance.

Assistants vs. Agents

In his 2017 book Designing Agentive Technology: AI That Works for People, Chris describes the differences like this:

I think an assistant should assist you with a task, and an agent takes agency and does things for you. So “agent” and “agentive” are the right terms for what I’m talking about.

[...]

If you are a distinguished, long-time student of human-computer interaction, you will note similar themes from the study of automation and what I’m describing. But where automation has as its goal the removal of the human from the system, agentive technology is explicitly in service to a human. An agent might have some automated components, but the intentions of the two fields of study are distinct.

To counter the objection, “Wait… isn’t every technology an agent?” he uses the example of a light switch:

For example, I can design a light switch when I think of it as a product, subject to industrial design decisions. But I can design a better light switch when I think of it as a problem that can be solved either manually with a switch or agentively with a motion detector or a camera with sophisticated image processing behind it. And that’s where the real power of the concept comes from. Because as we continue to evolve this skin of technology that increasingly covers both our biology and the world, we don’t want it to add to people’s burdens. We want to alleviate them and empower people to get done what needs to be done, even if we don’t want to do it. And for that, we need agents.

He follows this with a flow diagram (see below) for telling Agents and Assistants apart:

He closes the chapter with a useful recap about Agents:

Recap: Agents Are Persistent, Background Assistants

Far from being a one-off invention in the form of the thermostat, agentive technologies are appearing all around us, for many long-standing human problems. We can see them if we train ourselves to understand how they’re different.

They are

Software that persists.

Watching a data stream (or many) for triggers.

Performing a task for a user according to their goals and preferences.

They are not

Tech that assists a user with the performance of a task. That’s assistive tech.

Conversational “agents,” which are properly thought of as assistants.

Robots, the software for which is tightly coupled to the hardware. An agent may embody a robot, and a robot may operate as an agent.

Automation, in which the human is incidental or minimized.

They are different in that

A valet is the model.

Design focuses on easy setup and informative touchpoints.

When it’s working, it’s most often out of sight.

Touchpoints require conscious attention and consideration.

The goals of touchpoints are information, course correction, and helping the agent keep on track.
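For designers who want to carry this distinction into conversations with engineers, here is a minimal, hypothetical Python sketch of the recap above. It assumes a toy music catalog and a stream of new-release events; the names `Preferences`, `assistant_suggest`, and `run_agent` are illustrative inventions, not from Chris’s book or any Spotify API. The assistant only works when asked and leaves the decision to the user; the agent consumes a stream, treats a match with the user’s preferences as a trigger, and acts on their behalf. A finite list stands in for what would, in practice, be a long-running stream.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set, Tuple


@dataclass
class Preferences:
    # Hypothetical stand-in for a user's goals and preferences.
    liked_genres: Set[str] = field(default_factory=set)


def assistant_suggest(genre: str, catalog: Dict[str, str]) -> List[str]:
    """Assistive: runs only when the user asks and returns suggestions.
    The user still decides what, if anything, to add."""
    return sorted(title for title, g in catalog.items() if g == genre)


def run_agent(
    events: Iterable[Tuple[str, str]], prefs: Preferences, playlist: List[str]
) -> List[str]:
    """Agentive: keeps consuming (title, genre) events, treats a genre match
    as a trigger, and updates the playlist on the user's behalf."""
    for title, genre in events:
        if genre in prefs.liked_genres and title not in playlist:
            playlist.append(title)  # acts without the user being present
    return playlist


if __name__ == "__main__":
    catalog = {"Track A": "psych rock", "Track B": "jazz", "Track C": "psych rock"}
    # The assistant waits to be asked, then hands back options.
    print(assistant_suggest("psych rock", catalog))  # ['Track A', 'Track C']

    # The agent processes new releases while the user is away.
    new_releases = [("Track D", "psych rock"), ("Track E", "jazz")]
    print(run_agent(new_releases, Preferences({"psych rock"}), []))  # ['Track D']
```

The design choice worth noticing is where the decision sits: `assistant_suggest` returns options and stops, while `run_agent` changes the playlist itself, which is what makes its value “post-attention.”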

One more dimension of agents I’ve found useful is a concept Chris has spoken about since the release of the book. He says agents give users “Post-Attention Value,” the way an actor’s agent keeps looking for jobs for their client even when the client isn’t around. You will know you’re building an agent instead of an assistant because it delivers value even when the user isn’t paying attention. For example, when you ask ChatGPT a question, it gives you an answer. When you walk away, ChatGPT is not working in the background to monitor for better answers and send you an email when it finds one. It’s only assisting you in that moment.

A final note about automation

Not all automation is good. Some tasks are delightful precisely because they aren’t automated. For example, curating a music playlist is a fun activity. If Spotify automated away the entire process, some people would have a worse experience. So before adding automation to your product, make sure it adds value.

Putting the Automation Spectrum into practice

As with any human-centered design effort, most of the upfront work goes into defining the problem we need to solve for people. Step one is always customer research to identify the problem. Step two is investigating how important it is to solve. During this phase of the project, I use the Automation Spectrum to map the features we’ve already built. This gives us, as a team, context to discuss what type of automation might help solve the identified problem.

As an example, below I’ve mapped four Spotify features onto the spectrum:

Making a playlist is a Manual process today. You add a title, songs, and a description, but nothing changes unless you make the change.

At the bottom of every playlist is a list of recommended songs. This acts as an Assistant, adapting and recommending songs for the playlist as it grows. It doesn’t have agency because songs only get added to the playlist manually by the user.

Discover Weekly, on the other hand, looks at all of your past listening every week, finds similar music you haven’t played on Spotify, and refreshes automatically. In addition, you can like or remove songs and your feedback gets incorporated into the next playlist refresh. Discover Weekly is therefore an Agent, following your instructions and taking action on your behalf even while you’re not actively using the app. You get the post-attention value Chris identified.

Niche Mixes also refresh automatically and use personalization to tailor the playlist to each and every one of the 500MM+ users on Spotify. The difference is that the user has no control over how songs are generated, when, or along which dimension. Everything happens automatically in the background, which places Niche Mixes at the Automated end of the spectrum.

A feature map is an easy, helpful way to see whether we’re underdelivering somewhere along the Automation Spectrum.

Give this a try yourself. It’s a handy way to discuss competitor features, your own features, or even new feature ideas. Bonus: It’s also easy to draw on a whiteboard.

Be the facilitator

The next time you’re in a meeting where people begin talking about AI, steer the conversation toward what matters most: your customers’ and users’ needs. Help the room see that “AI” is just a fancy framing for different amounts of automation, and guide the discussion toward tangible, productive conversations about value. You can do this by asking questions like:

What do we know about how people do this task today?

What problem does AI let us solve in ways that weren’t possible before?

How might we map people’s existing workflow to uncover areas of opportunity?

How would automation make this experience better?

What amount of automation is needed?

Do people want this automated away or do they enjoy some part or all of the process?

What are the drawbacks of automation and how might we mitigate the downsides?

I hope this new framing helps you have more productive conversations with Product Managers, Engineers, or other designers. The Automation Spectrum is a simple, visual tool for aligning teams and guiding conversations about AI.

If you liked this topic, I cannot stress enough how useful Chris’s book is for understanding how to design Agents and the nuances of AI. Consider supporting him by buying a copy.

Have a fun week!

-Mat

P.S. What did I miss? How could this be more helpful to you? Hit reply or comment below.

P.P.S. Thank you to Ben Gebo for introducing me to King Gizzard & The Lizard Wizard, whom I featured at the top of this post.